Updated: 2023-10-31 23:11:09

Operator learning with PCA-Net: upper and lower complexity bounds
Samuel Lanthaler. 24(318):1-67, 2023.

Abstract: PCA-Net is a recently proposed neural operator architecture which combines principal component analysis (PCA) with neural networks to approximate operators between infinite-dimensional function spaces. The present work develops approximation theory for this approach, improving and significantly extending previous work in this direction: First, a novel universal approximation result is derived, under minimal assumptions on the underlying operator and the data-generating distribution.

Mixed Regression via Approximate Message Passing
Nelvin Tan, Ramji Venkataramanan. 24(317):1-44, 2023.

Abstract: We study the problem of regression in a generalized linear model (GLM) with multiple signals and latent variables. This model, which we call a matrix GLM, covers many widely studied problems in statistical learning, including mixed linear regression, max-affine regression, and mixture-of-experts. The goal in all these problems is to estimate the signals, and possibly some of the latent variables, from the observations. We propose a novel approximate message passing (AMP) algorithm for …

MARLlib: A Scalable and Efficient Multi-agent Reinforcement Learning Library
Siyi Hu, Yifan Zhong, Minquan Gao, Weixun Wang, Hao Dong, Xiaodan Liang, Zhihui Li, Xiaojun Chang, Yaodong Yang. 24(315):1-23, 2023.

Abstract: A significant challenge facing researchers in the area of multi-agent reinforcement learning (MARL) pertains to the identification of a library that can offer fast and compatible development for multi-agent tasks and algorithm combinations, while obviating the need to consider compatibility issues. In this paper, we present MARLlib, a library designed to address the …

Scalable Real-Time Recurrent Learning Using Columnar-Constructive Networks
Khurram Javed, Haseeb Shah, Richard S. Sutton, Martha White. 24(256):1-34, 2023.

Abstract: Constructing states from sequences of observations is an important component of reinforcement learning agents. One solution for state construction is to use recurrent neural networks. Back-propagation through time (BPTT) and real-time recurrent learning (RTRL) are two popular gradient-based methods for recurrent learning. BPTT requires complete trajectories of observations before it can compute the gradients and is unsuitable for online …

Torchhd: An Open Source Python Library to Support Research on Hyperdimensional Computing and Vector Symbolic Architectures
Mike Heddes, Igor Nunes, Pere Vergés, Denis Kleyko, Danny Abraham, Tony Givargis, Alexandru Nicolau, Alexander Veidenbaum. 24(255):1-10, 2023.

Abstract: Hyperdimensional computing (HD), also known as vector symbolic architectures (VSA), is a framework for computing with distributed representations by exploiting properties of random high-dimensional vector spaces. The commitment of the scientific community to aggregate and disseminate research in this particularly …
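To make "computing with distributed representations" concrete, here is a minimal pure-Python sketch of two core HD/VSA operations on random bipolar (±1) hypervectors: binding (elementwise product) and bundling (elementwise majority). It does not use Torchhd. The function names (`random_hv`, `bind`, `bundle`, `cosine`) and the key/value record are hypothetical and chosen only for illustration.

```python
import math
import random

def random_hv(dim, rng):
    """Random bipolar hypervector with entries in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]

def bind(a, b):
    """Binding: elementwise product. For bipolar vectors it is its own inverse."""
    return [x * y for x, y in zip(a, b)]

def bundle(*vs):
    """Bundling: elementwise majority (sign of the sum); ties are broken toward +1."""
    return [1 if sum(col) >= 0 else -1 for col in zip(*vs)]

def cosine(a, b):
    """Cosine similarity; every bipolar vector has norm sqrt(dim)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(len(a)) * math.sqrt(len(b)))

rng = random.Random(0)
dim = 10_000
color, shape = random_hv(dim, rng), random_hv(dim, rng)  # hypothetical "keys"
red, circle = random_hv(dim, rng), random_hv(dim, rng)   # hypothetical "values"

# Encode the record {color: red, shape: circle} as one hypervector.
record = bundle(bind(color, red), bind(shape, circle))

# Ask "what is the color?" by unbinding with the color key.
query = bind(record, color)
print(round(cosine(query, red), 2), round(cosine(query, circle), 2))
```

The unbound query is clearly similar to `red` and nearly orthogonal to `circle`. This works because independent random high-dimensional vectors are almost orthogonal with high probability.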

Fast Expectation Propagation for Heteroscedastic, Lasso-Penalized, and Quantile Regression
Jackson Zhou, John T. Ormerod, Clara Grazian. 24(314):1-39, 2023.

Abstract: Expectation propagation (EP) is an approximate Bayesian inference (ABI) method which has seen widespread use across machine learning and statistics, owing to its accuracy and speed. However, it is often difficult to apply EP to models with complex likelihoods, where the EP updates do not have a tractable form and need to be calculated using methods such as multivariate numerical quadrature. These methods increase run time and …

Adaptive False Discovery Rate Control with Privacy Guarantee
Xintao Xia, Zhanrui Cai. 24(252):1-35, 2023.

Abstract: Differentially private multiple testing procedures can protect the information of individuals used in hypothesis tests while guaranteeing a small fraction of false discoveries. In this paper, we propose a differentially private adaptive FDR control method that can control the classic FDR metric exactly at a user-specified level $\alpha$ with a privacy guarantee, which is a non-trivial improvement compared to the differentially private Benjamini-Hochberg method proposed in Dwork et al. …
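For reference, here is the classic (non-private) Benjamini-Hochberg step-up procedure that the differentially private baseline above builds on. It is the textbook procedure, not the authors' private adaptive method. The function name and example p-values are illustrative.

```python
def benjamini_hochberg(p_values, alpha):
    """Return sorted indices of hypotheses rejected by the BH step-up procedure at level alpha."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the LARGEST rank k (1-based) with p_(k) <= k * alpha / m,
    # even if some smaller ranks fail the comparison.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * alpha / m:
            k_max = rank
    # Reject the k_max hypotheses with the smallest p-values.
    return sorted(order[:k_max])

pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205]
print(benjamini_hochberg(pvals, alpha=0.05))
```

With eight hypotheses, the thresholds are $0.05k/8$. Only the two smallest p-values fall below their thresholds, so hypotheses 0 and 1 are rejected.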

Convex Reinforcement Learning in Finite Trials
Mirco Mutti, Riccardo De Santi, Piersilvio De Bartolomeis, Marcello Restelli. 24(250):1-42, 2023.

Abstract: Convex Reinforcement Learning (RL) is a recently introduced framework that generalizes the standard RL objective to any convex or concave function of the state distribution induced by the agent's policy. This framework subsumes several applications of practical interest, such as pure exploration, imitation learning, and risk-averse RL, among others. However, the previous convex RL literature implicitly evaluates the agent's performance over …

Prediction Equilibrium for Dynamic Network Flows
Lukas Graf, Tobias Harks, Kostas Kollias, Michael Markl. 24(310):1-33, 2023.

Abstract: We study a dynamic traffic assignment model, where agents base their instantaneous routing decisions on real-time delay predictions. We formulate a mathematically concise model and define dynamic prediction equilibrium (DPE), in which no agent can at any point during their journey improve their predicted travel time by switching to a different route. We demonstrate the versatility of our framework by showing that it subsumes the well-known full information and …

Dimension Reduction and MARS
Yu Liu LIU, Degui Li, Yingcun Xia. 24(309):1-30, 2023.

Abstract: The multivariate adaptive regression spline (MARS) is one of the popular estimation methods for nonparametric multivariate regression. However, as MARS is based on marginal splines, to incorporate interactions of covariates, products of the marginal splines must be used, which often leads to an unmanageable number of basis functions when the order of interaction is high and results in low estimation efficiency. In this paper, we improve the performance of MARS by using linear combinations of the …

Nevis'22: A Stream of 100 Tasks Sampled from 30 Years of Computer Vision Research
Jorg Bornschein, Alexandre Galashov, Ross Hemsley, Amal Rannen-Triki, Yutian Chen, Arslan Chaudhry, Xu Owen He, Arthur Douillard, Massimo Caccia, Qixuan Feng, Jiajun Shen, Sylvestre-Alvise Rebuffi, Kitty Stacpoole, Diego de las Casas, Will Hawkins, Angeliki Lazaridou, Yee Whye Teh, Andrei A. Rusu, Razvan Pascanu, Marc’Aurelio Ranzato. 24(308):1-77, 2023.

Abstract: A shared goal of several machine learning communities, like continual learning, meta-learning and transfer learning, is to design …

Fast Screening Rules for Optimal Design via Quadratic Lasso Reformulation
Guillaume Sagnol, Luc Pronzato. 24(307):1-32, 2023.

Abstract: The problems of Lasso regression and optimal design of experiments share a critical property: their optimal solutions are typically sparse, i.e., only a small fraction of the optimal variables are non-zero. Therefore, the identification of the support of an optimal solution reduces the dimensionality of the problem and can yield a substantial simplification of the calculations. It has recently been shown that linear regression with a squared $\ell_1$-norm …

Graph Attention Retrospective
Kimon Fountoulakis, Amit Levi, Shenghao Yang, Aseem Baranwal, Aukosh Jagannath. 24(246):1-52, 2023.

Abstract: Graph-based learning is a rapidly growing sub-field of machine learning with applications in social networks, citation networks, and bioinformatics. One of the most popular models is graph attention networks. They were introduced to allow a node to aggregate information from features of neighbor nodes in a non-uniform way, in contrast to simple graph convolution which does not distinguish the neighbors of a node. In this paper, we theoretically study the …
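The contrast drawn above between uniform and non-uniform neighbor aggregation can be seen in a toy sketch. Graph-convolution-style aggregation gives every neighbor weight 1/deg. An attention-style layer instead forms a softmax-weighted average whose weights depend on the features. The dot-product score is a hypothetical stand-in: graph attention networks compute scores with a learned function, and this is not the model analyzed in the paper.

```python
import math

def mean_aggregate(neighbors):
    """Simple graph-convolution-style aggregation: every neighbor gets weight 1/deg."""
    d = len(neighbors)
    return [sum(col) / d for col in zip(*neighbors)]

def attention_aggregate(x_self, neighbors):
    """Softmax-weighted aggregation using a dot-product score (illustrative assumption)."""
    scores = [sum(a * b for a, b in zip(x_self, x_j)) for x_j in neighbors]
    m = max(scores)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    weights = [e / z for e in exps]
    agg = [sum(w * x_j[k] for w, x_j in zip(weights, neighbors))
           for k in range(len(x_self))]
    return agg, weights

x_i = [1.0, 0.0]
nbrs = [[1.0, 0.0], [0.9, 0.1], [-1.0, 0.0]]  # two similar neighbors, one dissimilar
print(mean_aggregate(nbrs))
print(attention_aggregate(x_i, nbrs))
```

The mean gives the dissimilar neighbor weight 1/3. The attention weights shrink its contribution because its score is low.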

Confidence Intervals and Hypothesis Testing for High-dimensional Quantile Regression: Convolution Smoothing and Debiasing
Yibo Yan, Xiaozhou Wang, Riquan Zhang. 24(245):1-49, 2023.

Abstract: $\ell_1$-penalized quantile regression ($\ell_1$-QR) is a useful tool for modeling the relationship between input and output variables when detecting heterogeneous effects in the high-dimensional setting. Hypothesis tests can then be formulated based on the debiased $\ell_1$-QR estimator that reduces the bias induced by the Lasso penalty. However, the non-smoothness of the quantile loss brings great challenges to the …
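To see how convolution smoothing removes the non-smoothness mentioned above, start from the check loss $\rho_\tau(u) = u(\tau - \mathbb{1}\{u<0\})$. Convolving it with a Gaussian kernel of bandwidth $h$ gives, after a short calculation, $\ell_h(u) = u\,(\tau - \Phi(-u/h)) + h\,\phi(u/h)$. This function is differentiable, with derivative $\tau - \Phi(-u/h)$. The Gaussian kernel is our assumption for illustration; the paper's kernel and bandwidth choices may differ.

```python
import math

def check_loss(u, tau):
    """Quantile (check) loss rho_tau(u) = u * (tau - 1{u < 0}); kinked at u = 0."""
    return u * (tau - (1.0 if u < 0 else 0.0))

def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def norm_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def smoothed_check_loss(u, tau, h):
    """E[rho_tau(u + h Z)] with Z ~ N(0, 1): the Gaussian-convolution-smoothed check loss."""
    return u * (tau - norm_cdf(-u / h)) + h * norm_pdf(u / h)

def smoothed_check_grad(u, tau, h):
    """Derivative of the smoothed loss in u; continuous, unlike the check loss."""
    return tau - norm_cdf(-u / h)

for u in (-1.0, 0.0, 1.0):
    print(u, check_loss(u, 0.5), round(smoothed_check_loss(u, 0.5, 0.1), 4))
```

Away from zero, the smoothed loss agrees with the check loss up to terms that vanish as $h \to 0$. At $u = 0$ it equals $h\,\phi(0)$ instead of having a kink.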

Selection by Prediction with Conformal p-values
Ying Jin, Emmanuel J. Candes. 24(244):1-41, 2023.

Abstract: Decision making or scientific discovery pipelines such as job hiring and drug discovery often involve multiple stages: before any resource-intensive step, there is often an initial screening that uses predictions from a machine learning model to shortlist a few candidates from a large pool. We study screening procedures that aim to select candidates whose unobserved outcomes exceed user-specified values. We develop a method that wraps around any prediction model to produce a subset of …
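As a rough illustration of conformal p-values for this screening task, the sketch below takes a labeled calibration set, a fitted predictor `mu`, and a threshold `c`. For each new candidate it tests the null hypothesis that the candidate's outcome does not exceed `c`. The clipped score, the deterministic (conservative, non-randomized) p-value, and all names are our assumptions for illustration, not the authors' exact construction. The resulting p-values would then be passed to a multiple-testing procedure such as Benjamini-Hochberg to choose the shortlist.

```python
import math

def conformal_p_values(calib, test_x, mu, c):
    """Deterministic conformal p-values for H_j: Y_j <= c (illustrative sketch).

    calib: list of (x, y) pairs with observed outcomes.
    test_x: list of covariates whose outcomes are unobserved.
    mu: fitted prediction function, assumed trained on data disjoint from calib.
    """
    def score(x, y):
        # Clipped score: calibration points with y > c get +inf, so they never
        # count against a candidate; otherwise the score is c - mu(x).
        return math.inf if y > c else c - mu(x)

    calib_scores = [score(x, y) for x, y in calib]
    n = len(calib_scores)
    p_values = []
    for x in test_x:
        v_hat = c - mu(x)  # the score evaluated at the null boundary y = c
        count = sum(1 for v in calib_scores if v <= v_hat)  # ties counted conservatively
        p_values.append((1 + count) / (n + 1))
    return p_values

def mu(x):
    """Hypothetical fitted model."""
    return 2.0 * x

calib = [(0.1, 0.3), (0.4, 0.7), (0.5, 1.4), (0.9, 1.6), (1.2, 2.5)]
print(conformal_p_values(calib, [0.2, 1.5], mu, c=1.0))
```

The candidate with the larger prediction (`x = 1.5`) receives the smaller p-value. It is therefore the more likely of the two to be selected.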

Sparse Graph Learning from Spatiotemporal Time Series
Andrea Cini, Daniele Zambon, Cesare Alippi. 24(242):1-36, 2023.

Abstract: Outstanding achievements of graph neural networks for spatiotemporal time series analysis show that relational constraints introduce an effective inductive bias into neural forecasting architectures. Often, however, the relational information characterizing the underlying data-generating process is unavailable and the practitioner is left with the problem of inferring from data which relational graph to use in the subsequent processing stages. We propose novel, …

Improved Powered Stochastic Optimization Algorithms for Large-Scale Machine Learning
Zhuang Yang. 24(241):1-29, 2023.

Abstract: Stochastic optimization, especially stochastic gradient descent (SGD), is now the workhorse for the vast majority of problems in machine learning. Various strategies, e.g., control variates, adaptive learning rates, and momentum, have been developed to improve canonical SGD, which suffers from a low convergence rate and poor generalization in practice. Most of these strategies improve SGD in a way that can be attributed to controlling the updating direction (e.g., gradient descent …

Two Sample Testing in High Dimension via Maximum Mean Discrepancy
Hanjia Gao, Xiaofeng Shao. 24(304):1-33, 2023.

Abstract: Maximum Mean Discrepancy (MMD) has been widely used in the areas of machine learning and statistics to quantify the distance between two distributions in the $p$-dimensional Euclidean space. The asymptotic property of the sample MMD has been well studied when the dimension $p$ is fixed, using the theory of U-statistics. Motivated by the frequent use of the MMD test for data of moderately high dimension, we propose to investigate the behavior of the sample MMD in a high-dimensional …
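For concreteness, a common way to compute the sample MMD discussed above is the unbiased U-statistic estimator of $\mathrm{MMD}^2$ sketched below. The Gaussian kernel, its bandwidth, and the simulated data are illustrative assumptions. The paper's high-dimensional analysis and test calibration are not reproduced here.

```python
import math
import random

def gaussian_kernel(a, b, bandwidth):
    """Gaussian (RBF) kernel exp(-||a - b||^2 / (2 * bandwidth^2))."""
    sq = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq / (2.0 * bandwidth ** 2))

def mmd2_unbiased(xs, ys, bandwidth=1.0):
    """Unbiased U-statistic estimate of squared MMD between samples xs and ys."""
    m, n = len(xs), len(ys)

    def k(a, b):
        return gaussian_kernel(a, b, bandwidth)

    # Within-sample sums skip i == j; dropping the diagonal is what makes it unbiased.
    kxx = sum(k(xs[i], xs[j]) for i in range(m) for j in range(m) if i != j)
    kyy = sum(k(ys[i], ys[j]) for i in range(n) for j in range(n) if i != j)
    kxy = sum(k(xi, yj) for xi in xs for yj in ys)
    return kxx / (m * (m - 1)) + kyy / (n * (n - 1)) - 2.0 * kxy / (m * n)

rng = random.Random(1)
p = 5  # dimension
x = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(50)]
y_same = [[rng.gauss(0, 1) for _ in range(p)] for _ in range(50)]
y_shift = [[rng.gauss(1, 1) for _ in range(p)] for _ in range(50)]
print(mmd2_unbiased(x, y_same, 2.0), mmd2_unbiased(x, y_shift, 2.0))
```

Because the estimator is unbiased, it can be slightly negative when both samples come from the same distribution. A mean shift pushes it clearly above zero.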

We study the ranking of individuals, teams, or objects based on pairwise comparisons between them, using the Bradley-Terry model. Estimates of rankings within this model are commonly made using a simple iterative algorithm first introduced by Zermelo almost a century ago. Here we describe an alternative and similarly simple iteration that provably returns identical results but does so much faster: over a hundred times faster in some cases. We demonstrate this algorithm with applications to a range of example data sets and derive a number of results regarding its convergence.
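For reference, the classical Zermelo iteration mentioned above updates each strength $\pi_i$ to $W_i / \sum_{j \ne i} n_{ij}/(\pi_i + \pi_j)$. Here $W_i$ is item $i$'s total number of wins and $n_{ij}$ is the number of comparisons between $i$ and $j$. The sketch implements that classical update, normalizing to unit geometric mean (our choice of convention). It is not the faster alternative iteration proposed in the paper. It assumes the maximum-likelihood estimate exists, i.e. the directed graph of wins is strongly connected.

```python
import math

def zermelo_bradley_terry(wins, iters=1000, tol=1e-12):
    """Classical Zermelo (MM) iteration for Bradley-Terry strengths.

    wins[i][j] = number of times item i beat item j.
    Returns strengths normalized to geometric mean 1.
    """
    n = len(wins)
    pi = [1.0] * n
    total_wins = [sum(row) for row in wins]
    for _ in range(iters):
        new = []
        for i in range(n):
            denom = sum((wins[i][j] + wins[j][i]) / (pi[i] + pi[j])
                        for j in range(n) if j != i)
            new.append(total_wins[i] / denom)
        # Strengths are identified only up to scale, so fix the geometric mean at 1.
        g = math.exp(sum(math.log(v) for v in new) / n)
        new = [v / g for v in new]
        if max(abs(a - b) for a, b in zip(new, pi)) < tol:
            return new
        pi = new
    return pi

wins = [[0, 3, 1], [1, 0, 2], [2, 1, 0]]  # hypothetical results for three items
print(zermelo_bradley_terry(wins))
```

At convergence, each item's expected number of wins under the fitted model, $\sum_j n_{ij}\,\pi_i/(\pi_i + \pi_j)$, matches its observed wins $W_i$. This is the maximum-likelihood condition.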

Scalable Computation of Causal Bounds
Madhumitha Shridharan, Garud Iyengar. 24(237):1-35, 2023.

Abstract: We consider the problem of computing bounds for causal queries on causal graphs with unobserved confounders and discrete valued observed variables, where identifiability does not hold. Existing non-parametric approaches for computing such bounds use linear programming (LP) formulations that quickly become intractable for existing solvers because the size of the LP grows exponentially in the number of edges in the causal graph. We show that this LP can be significantly pruned, allowing us to …

We consider the linear discriminant analysis problem in high-dimensional settings. In this work, we propose PANDA (PivotAl liNear Discriminant Analysis), a tuning-insensitive method in the sense that it requires very little effort to tune the parameters. Moreover, we prove that PANDA achieves the optimal convergence rate in terms of both the estimation error and the misclassification rate. Our theoretical results are backed up by thorough numerical studies using both simulated and real datasets. In comparison with existing methods, we observe that PANDA yields equal or better performance and requires substantially less effort in parameter tuning.

MultiZoo and MultiBench: A Standardized Toolkit for Multimodal Deep Learning
Paul Pu Liang, Yiwei Lyu, Xiang Fan, Arav Agarwal, Yun Cheng, Louis-Philippe Morency, Ruslan Salakhutdinov. 24(234):1-7, 2023.

Abstract: Learning multimodal representations involves integrating information from multiple heterogeneous sources of data. In order to accelerate progress towards understudied modalities and tasks while ensuring real-world robustness, we release MultiZoo, a public toolkit consisting of standardized implementations of 20 core multimodal algorithms, and MultiBench, a large-scale benchmark …

In this paper, we study a learning problem in which a forecaster only observes partial information. By properly rescaling the problem, we heuristically derive a limiting PDE on Wasserstein space which characterizes the asymptotic behavior of the regret of the forecaster. Using a verification type argument, we show that the problem of obtaining regret bounds and efficient algorithms can be tackled by finding appropriate smooth sub/supersolutions of this parabolic PDE.

Statistical Comparisons of Classifiers by Generalized Stochastic Dominance
Christoph Jansen, Malte Nalenz, Georg Schollmeyer, Thomas Augustin. 24(231):1-37, 2023.

Abstract: Although this is a crucial question for the development of machine learning algorithms, there is still no consensus on how to compare classifiers over multiple data sets with respect to several criteria. Every comparison framework is confronted with at least three fundamental challenges: the multiplicity of quality criteria, the multiplicity of data sets, and the randomness of the selection of data sets. In this paper, we add a …

This paper investigates the sample complexity of learning a distributionally robust predictor under a particular distributional shift based on $\chi^2$-divergence, which is well known for its computational feasibility and statistical properties. We demonstrate that any hypothesis class $\mathcal{H}$ with finite VC dimension is distributionally robustly learnable. Moreover, we show that when the perturbation size is smaller than a constant, finite VC dimension is also necessary for distributionally robust learning by deriving a lower bound of sample complexity in terms of VC dimension.
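To make the $\chi^2$-divergence ambiguity set concrete, the sketch below works with a discrete nominal distribution $P$ and a loss vector $\ell$. It computes the worst-case expected loss over all $Q$ with $\chi^2(Q\,\|\,P) = \sum_i p_i (q_i/p_i - 1)^2 \le \rho$. By Cauchy-Schwarz, the worst case is $\mathbb{E}_P[\ell] + \sqrt{\rho\,\mathrm{Var}_P(\ell)}$, attained at $q_i = p_i\,(1 + t(\ell_i - \mathbb{E}_P[\ell]))$ with $t = \sqrt{\rho/\mathrm{Var}_P(\ell)}$. This closed form holds provided that $q$ stays nonnegative. The divergence convention (some authors include a factor $1/2$) and the example values are our assumptions. The sketch only illustrates the ambiguity set, not the paper's learnability results.

```python
import math

def chi2_divergence(q, p):
    """chi^2(Q || P) = sum_i p_i * (q_i / p_i - 1)^2 for discrete P with p_i > 0."""
    return sum(pi * (qi / pi - 1.0) ** 2 for qi, pi in zip(q, p))

def worst_case_expectation(losses, p, rho):
    """Worst-case E_Q[loss] over chi^2(Q || P) <= rho, using the closed form.

    Raises ValueError if the maximizing reweighting would be negative somewhere,
    in which case the nonnegativity constraint binds and the closed form is invalid.
    """
    mean = sum(pi * li for pi, li in zip(p, losses))
    var = sum(pi * (li - mean) ** 2 for pi, li in zip(p, losses))
    if var == 0.0:
        return mean, list(p)  # constant loss: every Q gives the same value
    t = math.sqrt(rho / var)
    q = [pi * (1.0 + t * (li - mean)) for pi, li in zip(p, losses)]
    if min(q) < 0.0:
        raise ValueError("rho too large: nonnegativity constraint is active")
    return mean + math.sqrt(rho * var), q

p = [0.5, 0.5]
value, q = worst_case_expectation([0.0, 1.0], p, rho=0.04)
print(value, q, chi2_divergence(q, p))
```

The adversarial $Q$ shifts mass toward the high-loss outcome until the divergence budget $\rho$ is exactly used up.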

Interpretable and Fair Boolean Rule Sets via Column Generation
Connor Lawless, Sanjeeb Dash, Oktay Gunluk, Dennis Wei. 24(229):1-50, 2023.

Abstract: This paper considers the learning of Boolean rules in disjunctive normal form (DNF, OR-of-ANDs, equivalent to decision rule sets) as an interpretable model for classification. An integer program is formulated to optimally trade classification accuracy for rule simplicity. We also consider the fairness setting and extend the formulation to include explicit constraints on two different measures of classification parity: equality of opportunity and …
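A DNF rule set is an OR of ANDs over Boolean conditions, which is what makes it easy to read. The sketch below evaluates such a rule set and measures its complexity as clauses plus literals (one common convention, assumed here). It also computes an equality-of-opportunity gap, taken as the absolute difference in true positive rates between two groups. It does not reproduce the paper's integer program or column generation. The feature names and data are hypothetical.

```python
def predict_dnf(rule_set, x):
    """Predict 1 if any clause (a list of (feature, value) literals) is fully satisfied."""
    return int(any(all(x[f] == v for f, v in clause) for clause in rule_set))

def complexity(rule_set):
    """Number of clauses plus total number of literals."""
    return len(rule_set) + sum(len(clause) for clause in rule_set)

def true_positive_rate(rule_set, rows):
    positives = [x for x, y in rows if y == 1]
    return sum(predict_dnf(rule_set, x) for x in positives) / len(positives)

def equal_opportunity_gap(rule_set, rows, group):
    """|TPR(group 0) - TPR(group 1)| for a binary protected attribute `group`."""
    a = [(x, y) for x, y in rows if x[group] == 0]
    b = [(x, y) for x, y in rows if x[group] == 1]
    return abs(true_positive_rate(rule_set, a) - true_positive_rate(rule_set, b))

# Hypothetical rule set: (income_high AND employed) OR (has_collateral)
rules = [[("income_high", 1), ("employed", 1)], [("has_collateral", 1)]]
data = [
    ({"income_high": 1, "employed": 1, "has_collateral": 0, "g": 0}, 1),
    ({"income_high": 0, "employed": 1, "has_collateral": 1, "g": 0}, 1),
    ({"income_high": 0, "employed": 0, "has_collateral": 0, "g": 1}, 1),
    ({"income_high": 1, "employed": 1, "has_collateral": 0, "g": 1}, 1),
    ({"income_high": 0, "employed": 1, "has_collateral": 0, "g": 1}, 0),
]
print(complexity(rules), equal_opportunity_gap(rules, data, "g"))
```

In a fairness-constrained formulation, a gap like this would appear as an explicit constraint alongside the accuracy/complexity trade-off.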

Autoregressive Networks
Binyan Jiang, Jialiang Li, Qiwei Yao. 24(227):1-69, 2023.

Abstract: We propose a first-order autoregressive (i.e., AR(1)) model for dynamic network processes in which edges change over time while nodes remain unchanged. The model depicts the dynamic changes explicitly. It also facilitates simple and efficient statistical inference methods, including a permutation test for diagnostic checking for the fitted network models. The proposed model can be applied to network processes with various underlying structures but with independent edges. As an illustration, an AR(1) …
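As we read it, the simplest form of an AR(1) network model lets each edge evolve independently as a two-state Markov chain. An absent edge appears with probability $\alpha$, and a present edge disappears with probability $\beta$, at each time step. The sketch simulates such a process for a small undirected network with homogeneous $\alpha$ and $\beta$. This is a simplifying assumption; the paper also covers richer, structured parameterizations. It then recovers the two parameters by transition counting, which is the maximum-likelihood estimator for this homogeneous case. Function names are hypothetical.

```python
import random

def simulate_ar1_network(n_nodes, n_steps, alpha, beta, seed=0):
    """Simulate adjacency snapshots; each edge flips 0->1 w.p. alpha and 1->0 w.p. beta."""
    rng = random.Random(seed)
    pairs = [(i, j) for i in range(n_nodes) for j in range(i + 1, n_nodes)]
    # Start from the stationary distribution: P(edge present) = alpha / (alpha + beta).
    state = {e: int(rng.random() < alpha / (alpha + beta)) for e in pairs}
    snapshots = [dict(state)]
    for _ in range(n_steps):
        for e in pairs:
            u = rng.random()
            if state[e] == 0 and u < alpha:
                state[e] = 1
            elif state[e] == 1 and u < beta:
                state[e] = 0
        snapshots.append(dict(state))
    return snapshots

def estimate_alpha_beta(snapshots):
    """Transition counts: alpha = #(0->1) / #(0 at t-1), beta = #(1->0) / #(1 at t-1)."""
    n01 = n0 = n10 = n1 = 0
    for prev, cur in zip(snapshots, snapshots[1:]):
        for e, x in prev.items():
            if x == 0:
                n0 += 1
                n01 += cur[e]
            else:
                n1 += 1
                n10 += 1 - cur[e]
    return n01 / n0, n10 / n1

snaps = simulate_ar1_network(n_nodes=30, n_steps=50, alpha=0.1, beta=0.3)
print(estimate_alpha_beta(snaps))
```

With 435 node pairs and 50 steps, there are enough observed transitions for the estimates to land close to the true values.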

High-Dimensional Inference for Generalized Linear Models with Hidden Confounding
Jing Ouyang, Kean Ming Tan, Gongjun Xu. 24(296):1-61, 2023.

Abstract: Statistical inferences for high-dimensional regression models have been extensively studied for their wide applications, ranging from genomics and neuroscience to economics. However, in practice, there are often potential unmeasured confounders associated with both the response and covariates, which can lead to invalidity of standard debiasing methods. This paper focuses on a generalized linear regression framework with hidden confounding and …

Merlion: End-to-End Machine Learning for Time Series
Aadyot Bhatnagar, Paul Kassianik, Chenghao Liu, Tian Lan, Wenzhuo Yang, Rowan Cassius, Doyen Sahoo, Devansh Arpit, Sri Subramanian, Gerald Woo, Amrita Saha, Arun Kumar Jagota, Gokulakrishnan Gopalakrishnan, Manpreet Singh, K C Krithika, Sukumar Maddineni, Daeki Cho, Bo Zong, Yingbo Zhou, Caiming Xiong, Silvio Savarese, Steven Hoi, Huan Wang. 24(226):1-6, 2023.

Abstract: We introduce Merlion, an open-source machine learning library for time series. It features a unified interface for many commonly used models and datasets for …

Augmented Transfer Regression Learning with Semi-non-parametric Nuisance Models
Molei Liu, Yi Zhang, Katherine P. Liao, Tianxi Cai. 24(293):1-50, 2023.

Abstract: We develop an augmented transfer regression learning (ATReL) approach that introduces an imputation model to augment the importance weighting equation to achieve double robustness for covariate shift correction. More significantly, we propose a novel semi-non-parametric (SNP) construction framework for the two nuisance models. Compared with existing doubly robust approaches relying on fully parametric or fully non-parametric machine learning …

Conditional Distribution Function Estimation Using Neural Networks for Censored and Uncensored Data
Bingqing Hu, Bin Nan. 24(223):1-26, 2023.

Abstract: Most work in neural networks focuses on estimating the conditional mean of a continuous response variable given a set of covariates. In this article, we consider estimating the conditional distribution function using neural networks for both censored and uncensored data. The algorithm is built upon the data structure particularly constructed for the Cox regression with time-dependent covariates. Without imposing any model assumptions, we consider a …

Multi-source Learning via Completion of Block-wise Overlapping Noisy Matrices
Doudou Zhou, Tianxi Cai, Junwei Lu. 24(221):1-43, 2023.

Abstract: Electronic healthcare records (EHR) provide a rich resource for healthcare research. An important problem for the efficient utilization of the EHR data is the representation of the EHR features, which include the unstructured clinical narratives and the structured codified data. Matrix factorization-based embeddings trained using the summary-level co-occurrence statistics of EHR data have provided a promising solution for feature representation while …

A Unified Framework for Factorizing Distributional Value Functions for Multi-Agent Reinforcement Learning
Wei-Fang Sun, Cheng-Kuang Lee, Simon See, Chun-Yi Lee. 24(220):1-32, 2023.

Abstract: In fully cooperative multi-agent reinforcement learning (MARL) settings, environments are highly stochastic due to the partial observability of each agent and the continuously changing policies of other agents. To address these issues, we propose a unified framework, called DFAC, for integrating distributional RL with value function factorization methods. This framework generalizes expected value …

A Unified Analysis of Multi-task Functional Linear Regression Models with Manifold Constraint and Composite Quadratic Penalty
Shiyuan He, Hanxuan Ye, Kejun He. 24(291):1-69, 2023.

Abstract: This work studies the multi-task functional linear regression models where both the covariates and the unknown regression coefficients (called slope functions) are curves. For slope function estimation, we employ penalized splines to balance bias, variance, and computational complexity. The power of multi-task learning is brought in by imposing additional structures over the slope functions. We propose a …

Low Tree-Rank Bayesian Vector Autoregression Models
Leo L Duan, Zeyu Yuwen, George Michailidis, Zhengwu Zhang. 24(286):1-35, 2023.

Abstract: Vector autoregression has been widely used for modeling and analysis of multivariate time series data. In high-dimensional settings, model parameter regularization schemes inducing sparsity yield interpretable models and achieve good forecasting performance. However, in many data applications, such as those in neuroscience, the Granger causality graph estimates from existing vector autoregression methods tend to be quite dense and difficult to interpret, …

Near-Optimal Weighted Matrix Completion
Oscar López. 24(283):1-40, 2023.

Abstract: Recent work in the matrix completion literature has shown that prior knowledge of a matrix's row and column spaces can be successfully incorporated into reconstruction programs to substantially benefit matrix recovery. This paper proposes a novel methodology that exploits more general forms of known matrix structure in terms of subspaces. The work derives reconstruction error bounds that are informative in practice, providing insight to previous approaches in the literature while introducing novel programs with reduced …

The Bayesian Learning Rule
Mohammad Emtiyaz Khan, Håvard Rue. 24(281):1-46, 2023.

Abstract: We show that many machine-learning algorithms are specific instances of a single algorithm called the Bayesian learning rule. The rule, derived from Bayesian principles, yields a wide range of algorithms from fields such as optimization, deep learning, and graphical models. This includes classical algorithms such as ridge regression, Newton's method, and the Kalman filter, as well as modern deep-learning algorithms such as stochastic-gradient descent, RMSprop, and Dropout. The key idea in deriving such …

Sparse Markov Models for High-dimensional Inference
Guilherme Ost, Daniel Y. Takahashi. 24(279):1-54, 2023.

Abstract: Finite-order Markov models are well-studied models for dependent finite alphabet data. Despite their generality, application in empirical work is rare when the order $d$ is large relative to the sample size $n$ (e.g., $d = \mathcal{O}(n)$). Practitioners rarely use higher-order Markov models because (1) the number of parameters grows exponentially with the order, (2) the sample size $n$ required to estimate each parameter grows exponentially with the order, and (3) the interpretation is often …
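Point (1) above is easy to quantify. A full order-$d$ Markov chain on an alphabet of size $m$ has one conditional distribution over $m$ symbols for each of the $m^d$ possible pasts, hence $m^d(m-1)$ free parameters. A short check, with the alphabet size and orders chosen for illustration:

```python
def markov_param_count(alphabet_size, order):
    """Free parameters of a full order-d Markov chain: m**d contexts, m - 1 each."""
    return alphabet_size ** order * (alphabet_size - 1)

for d in (1, 5, 10, 20):
    print(d, markov_param_count(4, d))
```

For a four-letter alphabet (as in DNA) and $d = 10$, this already gives $4^{10} \cdot 3 = 3{,}145{,}728$ parameters.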

Adaptive Clustering Using Kernel Density Estimators
Ingo Steinwart, Bharath K. Sriperumbudur, Philipp Thomann. 24(275):1-56, 2023.

Abstract: We derive and analyze a generic, recursive algorithm for estimating all splits in a finite cluster tree as well as the corresponding clusters. We further investigate statistical properties of this generic clustering algorithm when it receives level set estimates from a kernel density estimator. In particular, we derive finite sample guarantees, consistency, rates of convergence, and an adaptive data-driven strategy for choosing the kernel bandwidth. For …

A Continuous-time Stochastic Gradient Descent Method for Continuous Data
Kexin Jin, Jonas Latz, Chenguang Liu, Carola-Bibiane Schönlieb. 24(274):1-48, 2023.

Abstract: Optimization problems with continuous data appear in, e.g., robust machine learning, functional data analysis, and variational inference. Here, the target function is given as an integral over a family of continuously indexed target functions, integrated with respect to a probability measure. Such problems can often be solved by stochastic optimization methods: performing optimization steps with respect to the indexed target …

Updated: 2023-10-31 23:11:09

Distributed Sparse Regression via Penalization
Yao Ji, Gesualdo Scutari, Ying Sun, Harsha Honnappa; 24(272):1-62, 2023.

Abstract: We study sparse linear regression over a network of agents, modeled as an undirected graph with no centralized node. The estimation problem is formulated as the minimization of the sum of the local LASSO loss functions plus a quadratic penalty of the consensus constraint, the latter being instrumental to obtain distributed solution methods. While penalty-based consensus methods have been extensively studied in the optimization literature, their statistical and […]
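This minimal sketch shows why the penalty makes the problem distributable. It assumes the objective $\sum_i \big[\tfrac{1}{2m_i}\|y_i - A_i x_i\|^2 + \lambda\|x_i\|_1\big] + \tfrac{1}{2\gamma}\sum_{(i,j)\in E}\|x_i - x_j\|^2$, where agent $i$ holds $(A_i, y_i)$ and its own copy $x_i$. The gradient of the smooth part with respect to $x_i$ involves only agent $i$'s data and its neighbors' copies. Each agent can therefore compute one proximal-gradient step locally. The penalty scaling, the step-size rule, and the function names are illustrative assumptions, not the algorithms analyzed in the paper.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def penalized_distributed_lasso(A, y, edges, lam, gamma, n_iter=2000):
    """Proximal gradient on a consensus-penalized sum of local LASSO losses.

    A, y  : lists of local design matrices / responses, one per agent.
    edges : undirected edges (i, j) of the communication graph.
    Assumed objective (illustrative, not taken from the paper):
        sum_i [ ||y_i - A_i x_i||^2 / (2 m_i) + lam * ||x_i||_1 ]
        + (1 / (2 * gamma)) * sum_{(i, j) in edges} ||x_i - x_j||^2
    """
    n_agents, p = len(A), A[0].shape[1]
    neighbors = [[] for _ in range(n_agents)]
    for i, j in edges:
        neighbors[i].append(j)
        neighbors[j].append(i)
    # Step size 1 / L, with L bounding the Lipschitz constant of the smooth part
    # (largest local data curvature plus 2 * max degree / gamma from the penalty).
    max_deg = max(len(nb) for nb in neighbors)
    lipschitz = max(np.linalg.norm(Ai, 2) ** 2 / Ai.shape[0] for Ai in A) + 2 * max_deg / gamma
    step = 1.0 / lipschitz
    X = np.zeros((n_agents, p))
    for _ in range(n_iter):
        X_new = np.empty_like(X)
        for i in range(n_agents):
            # Uses only agent i's data and the current copies held by its neighbors.
            grad = A[i].T @ (A[i] @ X[i] - y[i]) / A[i].shape[0]
            grad += sum(X[i] - X[j] for j in neighbors[i]) / gamma
            X_new[i] = soft_threshold(X[i] - step * grad, step * lam)
        X = X_new
    return X
```

A smaller `gamma` enforces agreement between agents more strongly. It also increases the curvature bound and so shrinks the step size.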

Updated: 2023-10-31 23:11:09

Causal Discovery with Unobserved Confounding and Non-Gaussian Data
Y. Samuel Wang, Mathias Drton; 24(271):1-61, 2023.

Abstract: We consider recovering causal structure from multivariate observational data. We assume the data arise from a linear structural equation model (SEM) in which the idiosyncratic errors are allowed to be dependent in order to capture possible latent confounding. Each SEM can be represented by a graph where vertices represent observed variables, directed edges represent direct causal effects, and bidirected edges represent dependence among error terms. Specifically, we assume […]
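This minimal sketch of the model class uses an assumed convention. `B[i, j]` is the coefficient of the directed edge $j \to i$, and $\Omega = \mathrm{Cov}(\varepsilon)$ has nonzero off-diagonal entries for bidirected edges. Then $X = BX + \varepsilon$, so $X = (I-B)^{-1}\varepsilon$ and $\Sigma = (I-B)^{-1}\Omega(I-B)^{-T}$. The three-variable graph ($0 \to 1 \to 2$ plus $0 \leftrightarrow 2$), the coefficients, and the Laplace/uniform error choices are all hypothetical. The code illustrates the model only, not the paper's discovery procedure.

```python
import numpy as np


def implied_covariance(B, Omega):
    """Covariance of X solving X = B X + eps with Cov(eps) = Omega."""
    inv = np.linalg.inv(np.eye(B.shape[0]) - B)
    return inv @ Omega @ inv.T


def simulate_sem(B, n, c=0.8, seed=0):
    """Draw n samples from a 3-variable linear SEM with non-Gaussian errors.

    Errors are independent Laplace(0, 1) draws (variance 2), except that
    eps_0 and eps_2 share a hypothetical latent confounder
    L ~ Uniform(-sqrt(3), sqrt(3)) with unit variance. This creates the
    bidirected edge 0 <-> 2 with error covariance c**2.
    """
    rng = np.random.default_rng(seed)
    e = rng.laplace(0.0, 1.0, size=(n, 3))
    latent = rng.uniform(-np.sqrt(3), np.sqrt(3), size=n)
    e[:, 0] += c * latent
    e[:, 2] += c * latent
    # Each row x solves x = B x + eps, i.e. x = (I - B)^{-1} eps.
    return e @ np.linalg.inv(np.eye(3) - B).T


if __name__ == "__main__":
    B = np.zeros((3, 3))
    B[1, 0], B[2, 1] = 0.5, -0.7                 # edges 0 -> 1 and 1 -> 2
    Omega = 2.0 * np.eye(3)
    Omega[[0, 0, 2, 2], [0, 2, 0, 2]] += 0.64    # c**2 from the shared latent
    X = simulate_sem(B, 200_000)
    print(np.round(np.cov(X, rowvar=False) - implied_covariance(B, Omega), 3))
```

This only checks the covariance identity against simulated data. It does not attempt to recover the graph.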

Updated: 2023-10-31 23:11:09

Sharper Analysis for Minibatch Stochastic Proximal Point Methods: Stability, Smoothness, and Deviation
Xiao-Tong Yuan, Ping Li; 24(270):1-52, 2023.

Abstract: Stochastic proximal point (SPP) methods have gained recent attention for stochastic optimization, with strong convergence guarantees and robustness superior to that of classic stochastic gradient descent (SGD), showcased at little to no added computational cost. In this article, we study a minibatch variant of SPP, namely M-SPP, for solving convex composite risk minimization problems. The core contribution is a set of […]
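A minibatch proximal point step replaces the SGD gradient step with $x_{t+1} = \arg\min_x \tfrac{1}{b}\sum_{i \in S_t} \ell_i(x) + \tfrac{1}{2\eta}\|x - x_t\|^2$. This minimal sketch assumes a least-squares loss, not the general convex composite setting of the paper, so the subproblem reduces to one small linear solve. The batch size, $\eta$, and iteration count are illustrative.

```python
import numpy as np


def minibatch_spp_least_squares(A, y, batch_size=16, eta=1.0, n_iter=500, seed=0):
    """Minibatch stochastic proximal point for the loss 0.5 * mean((A x - y)**2).

    Each iteration draws a minibatch S and solves the proximal subproblem
        x_next = argmin_x 0.5 * mean_{i in S} (a_i @ x - y_i)**2
                          + ||x - x_t||**2 / (2 * eta)
    exactly via its normal equations.
    """
    rng = np.random.default_rng(seed)
    n, p = A.shape
    x = np.zeros(p)
    for _ in range(n_iter):
        idx = rng.choice(n, size=batch_size, replace=False)
        A_b, y_b = A[idx], y[idx]
        # (A_b^T A_b / b + I / eta) x_next = A_b^T y_b / b + x_t / eta
        lhs = A_b.T @ A_b / batch_size + np.eye(p) / eta
        rhs = A_b.T @ y_b / batch_size + x / eta
        x = np.linalg.solve(lhs, rhs)
    return x
```

In this least-squares case the update contracts toward the minibatch solution for any $\eta > 0$. A plain SGD step, by contrast, needs a step size that is small relative to the curvature of the minibatch loss to stay stable.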

Updated: 2023-10-31 23:11:09

Revisiting minimum description length complexity in overparameterized models
Raaz Dwivedi, Chandan Singh, Bin Yu, Martin Wainwright; 24(268):1-59, 2023.

Abstract: Complexity is a fundamental concept underlying statistical learning theory that aims to inform generalization performance. Parameter count, while successful in low-dimensional settings, is not well-justified for overparameterized settings, where the number of parameters exceeds the number of training samples. We revisit complexity measures based on Rissanen's principle of minimum description length (MDL) and define a novel MDL-based […]
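For reference, the classical asymptotic form of Rissanen's two-part code for a Gaussian regression model with $k$ parameters is roughly $\tfrac{n}{2}\log(\mathrm{RSS}/n) + \tfrac{k}{2}\log n$. The sketch below applies it to choosing a polynomial degree. The data and degree range are hypothetical, and this is not the MDL-based complexity the paper defines. Its $\tfrac{k}{2}\log n$ term charges per parameter, which is the parameter-count view the abstract calls not well-justified once parameters outnumber samples.

```python
import numpy as np


def two_part_mdl(x, y, degree):
    """Approximate two-part code length (in nats) of a polynomial fit.

    Uses the classical asymptotic form
        (n / 2) * log(RSS / n) + (k / 2) * log(n),  k = degree + 1,
    i.e. a data cost under a Gaussian model plus a per-parameter cost.
    """
    n = len(x)
    coeffs = np.polyfit(x, y, degree)
    rss = float(np.sum((np.polyval(coeffs, x) - y) ** 2))
    k = degree + 1
    return 0.5 * n * np.log(rss / n) + 0.5 * k * np.log(n)


def select_degree(x, y, max_degree=8):
    """Pick the polynomial degree with the shortest approximate code length."""
    lengths = [two_part_mdl(x, y, d) for d in range(max_degree + 1)]
    return int(np.argmin(lengths))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = np.linspace(-1.0, 1.0, 200)
    y = 1.0 + 2.0 * x - 3.0 * x**2 + 0.1 * rng.normal(size=x.size)
    print("selected degree:", select_degree(x, y))
```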

Updated: 2023-10-31 23:11:09

Sparse Plus Low Rank Matrix Decomposition: A Discrete Optimization Approach
Dimitris Bertsimas, Ryan Cory-Wright, Nicholas A. G. Johnson; 24(267):1-51, 2023.

Abstract: We study the Sparse Plus Low-Rank decomposition problem (SLR), which is the problem of decomposing a corrupted data matrix into a sparse matrix of perturbations plus a low-rank matrix containing the ground truth. SLR is a fundamental problem in Operations Research and Machine Learning which arises in various applications, including data compression, latent semantic indexing, collaborative filtering, and medical imaging. We […]
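This sketch uses a simple alternating-projection heuristic to make the decomposition concrete. It fits the low-rank part by a truncated SVD and the sparse part by keeping the largest residual entries. It assumes the target rank and the number of corrupted entries are known. It is not the discrete optimization approach of the paper.

```python
import numpy as np


def sparse_plus_low_rank(D, rank, n_sparse, n_iter=100):
    """Heuristic split D ~ S + L by alternating projections.

    L: best rank-`rank` approximation of D - S (truncated SVD).
    S: keep the `n_sparse` largest-magnitude entries of D - L, zero the rest.
    """
    S = np.zeros_like(D)
    L = np.zeros_like(D)
    for _ in range(n_iter):
        U, s, Vt = np.linalg.svd(D - S, full_matrices=False)
        L = (U[:, :rank] * s[:rank]) @ Vt[:rank]
        R = D - L
        S = np.zeros_like(D)
        if n_sparse > 0:
            # Flat indices of the n_sparse largest |R| entries.
            top = np.argpartition(np.abs(R), -n_sparse, axis=None)[-n_sparse:]
            S.flat[top] = R.flat[top]
    return S, L


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    L_true = rng.normal(size=(30, 2)) @ rng.normal(size=(2, 30))
    S_true = np.zeros((30, 30))
    idx = rng.choice(900, size=20, replace=False)
    S_true.flat[idx] = 5.0 * rng.choice([-1.0, 1.0], size=20)
    S, L = sparse_plus_low_rank(L_true + S_true, rank=2, n_sparse=20)
    print("relative error in L:", np.linalg.norm(L - L_true) / np.linalg.norm(L_true))
```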

Updated: 2023-10-31 23:11:09

Distributed Algorithms for U-statistics-based Empirical Risk Minimization
Lanjue Chen, Alan T.K. Wan, Shuyi Zhang, Yong Zhou; 24(263):1-43, 2023.

Abstract: Empirical risk minimization, where the underlying loss function depends on a pair of data points, covers a wide range of application areas in statistics, including pairwise ranking and survival analysis. The common empirical risk estimator, obtained by averaging values of a loss function over all possible pairs of observations, is essentially a U-statistic. One well-known problem with minimizing U-statistic-type empirical risks is that the […]
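This minimal sketch assumes a pairwise ranking hinge loss, which is a hypothetical choice of loss. The full U-statistic averages the loss over all $n(n-1)/2$ pairs, which costs $O(n^2)$ work. A generic divide-and-conquer variant averages U-statistics computed within disjoint blocks. This variant is shown only for illustration and is not necessarily one of the algorithms proposed in the paper.

```python
from itertools import combinations

import numpy as np


def pairwise_hinge(score_i, score_j, label_i, label_j):
    """Ranking hinge loss for one pair; zero when the labels tie."""
    if label_i == label_j:
        return 0.0
    sign = 1.0 if label_i > label_j else -1.0
    return max(0.0, 1.0 - sign * (score_i - score_j))


def u_statistic_risk(scores, labels):
    """Average pairwise loss over all n * (n - 1) / 2 unordered pairs."""
    pairs = list(combinations(range(len(scores)), 2))
    total = sum(pairwise_hinge(scores[i], scores[j], labels[i], labels[j]) for i, j in pairs)
    return total / len(pairs)


def divide_and_conquer_risk(scores, labels, n_machines):
    """Average of local U-statistics computed on disjoint blocks of the data.

    Each block only forms pairs within itself, so total work drops to roughly
    n**2 / n_machines, at the price of discarding cross-block pairs.
    """
    blocks = np.array_split(np.arange(len(scores)), n_machines)
    return float(np.mean([u_statistic_risk(scores[b], labels[b]) for b in blocks]))


if __name__ == "__main__":
    scores = np.array([0.0, 0.5, 1.0, 0.2])
    labels = np.array([1, 0, 0, 1])
    print(u_statistic_risk(scores, labels), divide_and_conquer_risk(scores, labels, 2))
```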

Updated: 2023-10-26 18:30:53

Visualization Tools and Resources, October 2023 Roundup (Members Only)
October 26, 2023. Topic: The Process. Tags: roundup.

Welcome to issue 262 of The Process, the newsletter for FlowingData members where we look closer at how the charts get made. I’m Nathan Yau. Every month I collect useful things to help you make better charts. Here is the good stuff for October 2023.

To access this issue of The Process, you must be a member.

The Process is a weekly newsletter on how visualization tools, rules, and guidelines work in practice. I publish every Thursday. Get it in your inbox or read it on FlowingData. You also gain unlimited access to hundreds of hours worth […]

Updated: 2023-10-20 16:16:45

Google Maps and 3D experiments
October 20, 2023. Topic: Maps. Tags: 3D, Google Maps, Houdini, Robert Hodgin.

The Google Maps API now lets you access high-resolution 3D map tiles. Robert Hodgin has been experimenting with the new data source using Houdini, 3D graphics software that might as well be black magic.

Updated: 2023-10-16 06:56:36

In today’s data-driven world, data visualization simplifies complex information and empowers individuals to make informed decisions. One particularly valuable chart type is the Resource Chart, which facilitates efficient resource allocation. This tutorial will be your essential guide to creating dynamic resource charts using JavaScript. A resource chart is a type of Gantt chart that visualizes […]

The post How to Create Resource Chart with JavaScript appeared first on AnyChart News.

Updated: 2023-10-12 21:11:11

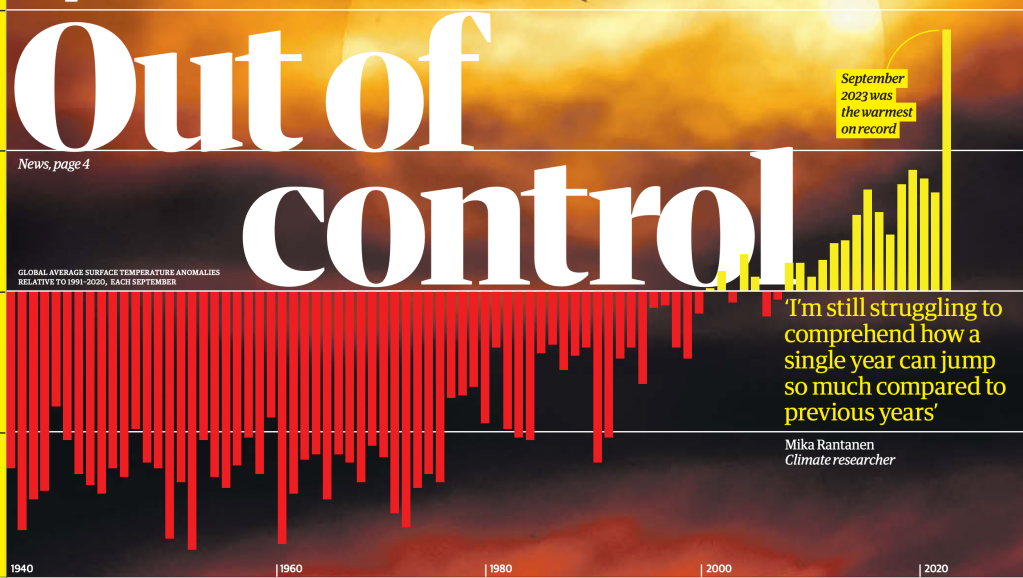

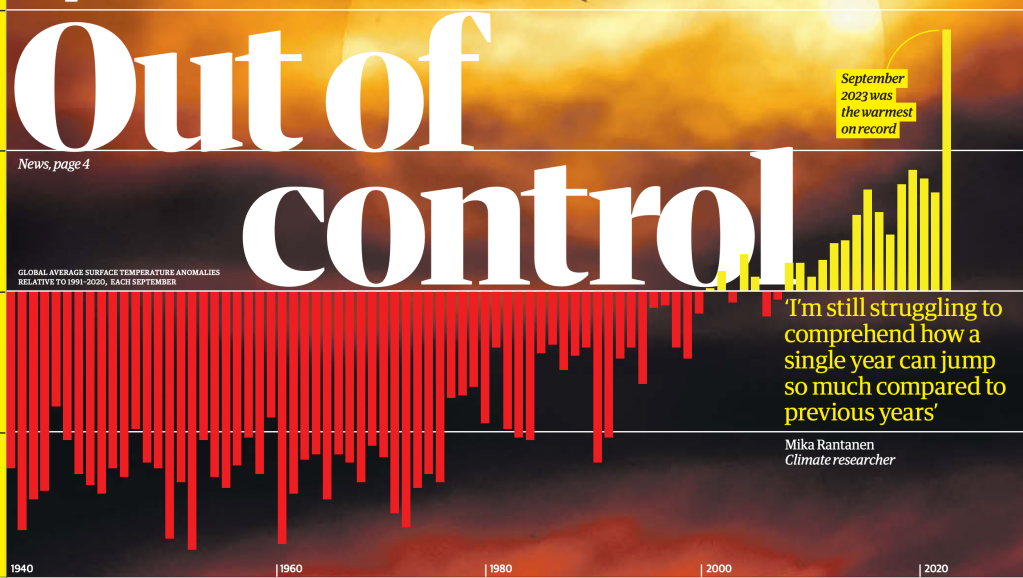

New Podcast: The Guardian’s Pamela Duncan and Ashley Kirk join me to talk about how data journalism has changed since I was there, how the news organisation works today and what is coming next. Music by TwoTone, based on data from this story about rising surface temperatures. You can hear the full (long) track here.
